Image credit: Apple

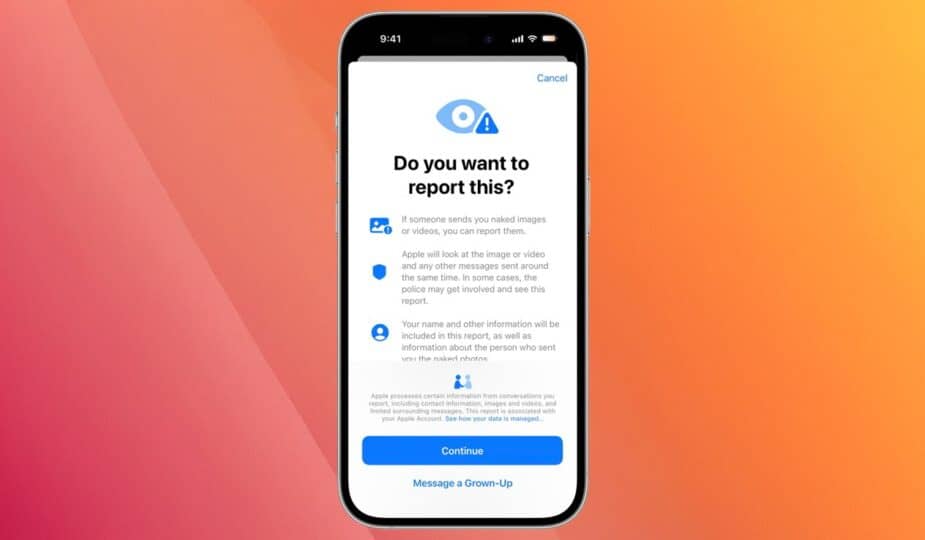

A new feature introduced as part of the iOS 18.2 beta allows children in Australia to report inappropriate content directly to Apple.

The feature extends the child safety measures included in iOS 17, which automatically detect images and videos containing nudity in iMessage, AirDrop, FaceTime, and Photos.

Previously, when the feature was triggered, two pop-up windows would appear prompting intervention. They explained how to contact authorities and asked the child to alert a parent or guardian.

Now, when nudity is detected, a new pop-up window will appear. Users will be able to report images and videos directly to Apple, which could then send the information to authorities.

When the alert appears, the user’s device will generate a report including any offensive material, messages sent immediately before or after the material, and contact information for both accounts. Users will be given the opportunity to fill out a form describing what happened.

Once Apple receives the report, the content will be reviewed. The company may then take action against the account, including disabling the user’s ability to send messages via iMessage and reporting the issue to law enforcement.

The feature is currently available in the iOS 18.2 beta in Australia and will roll out globally at a later date.

As The Guardian notes, Apple likely chose Australia because the country will require companies to monitor child abuse and terrorism-related content on cloud-based messaging services by the end of 2024.

Apple has warned that Australia's draft code will not protect end-to-end encryption, leaving users’ messages vulnerable to mass surveillance. Apple has formally opposed such efforts since late 2018.

Apple has come under fire for its handling of child sexual abuse material (CSAM) on its platforms. Early on, many watchdog groups accused the company of not taking CSAM protection seriously.

In 2021, Apple planned to roll out CSAM protection that would scan users’ iCloud photos for known CSAM images. If an image was found, Apple would review it and then send a report to the National Center for Missing & Exploited Children (NCMEC).

Many users were outraged by the idea of Apple scanning their personal images and videos and worried about false positives. Apple eventually abandoned the idea, citing concerns that scanning the data “would create new threat vectors for data thieves to find and exploit.”

In 2024, the UK's National Society for the Prevention of Cruelty to Children (NSPCC) said it had found more cases of child abuse images shared on Apple platforms in the UK than Apple reported globally.

Follow AppleInsider on Google News