Apple Sued Over Dropping CSAM Detection in 2022 While Keeping Image Nudity Detection

A child sexual abuse victim is suing Apple over its 2022 decision to abandon a previously announced plan to scan images stored in iCloud for child sexual abuse material.

Apple initially unveiled a plan in August 2021 to combat child sexual abuse material (CSAM) by scanning images on the device before they were uploaded to iCloud, using a hash-based matching system. It would also warn users before they sent or received photos in which an algorithm had detected nudity.
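The basic idea of hash matching can be illustrated with a simplified sketch. This is not Apple's implementation: Apple's proposal used a perceptual hash called NeuralHash combined with cryptographic protocols, whereas this sketch swaps in an exact SHA-256 digest. The function names `image_digest`, `count_matches`, and `should_flag`, and the `threshold` parameter, are hypothetical.

```python
import hashlib


def image_digest(image_bytes: bytes) -> str:
    # Exact SHA-256 digest: a stand-in for a perceptual hash such as NeuralHash.
    # Unlike a perceptual hash, it only matches byte-identical files.
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(uploads: list[bytes], known_hashes: set[str]) -> int:
    # Count uploaded images whose digest appears in the database of known hashes.
    return sum(1 for image in uploads if image_digest(image) in known_hashes)


def should_flag(uploads: list[bytes], known_hashes: set[str], threshold: int) -> bool:
    # Flag an account only once the number of matches reaches the (hypothetical)
    # threshold, so a single accidental match does not trigger a review.
    return count_matches(uploads, known_hashes) >= threshold


# Usage with placeholder byte strings standing in for image files.
known = {image_digest(b"known-image-1"), image_digest(b"known-image-2")}
uploads = [b"holiday-photo", b"known-image-1", b"known-image-2"]
print(count_matches(uploads, known))             # 2
print(should_flag(uploads, known, threshold=3))  # False
```

An exact digest only catches byte-identical copies; a perceptual hash is meant to also match resized or re-encoded versions of the same image, which is why a real system would use one.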

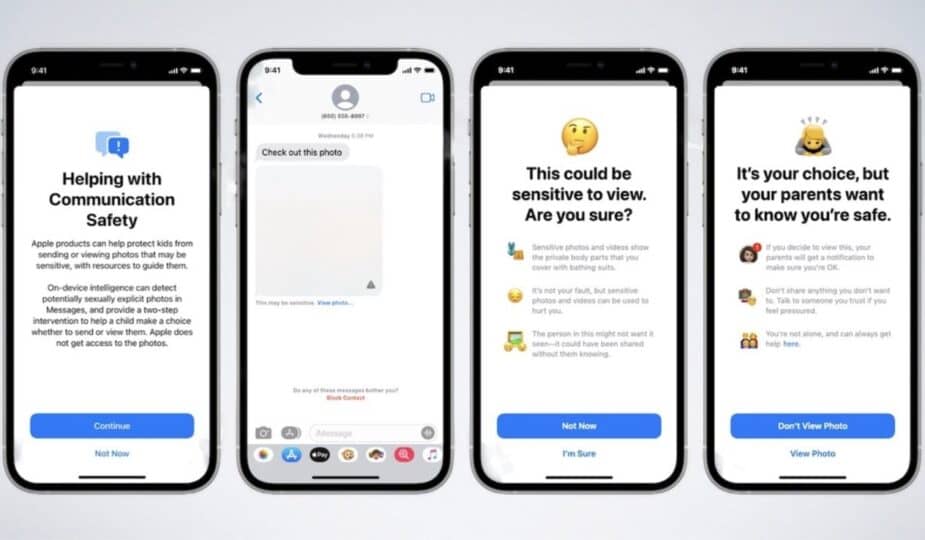

The nudity detection feature, called Communication Safety, is still in effect today. However, Apple backed away from its CSAM detection plan after backlash from privacy experts, child safety groups, and governments.

A 27-year-old woman who was sexually abused by a relative as a child has sued Apple, under a court-approved pseudonym, over its decision to abandon the CSAM detection feature. She says law enforcement previously notified her that images of her abuse were stored in iCloud via a MacBook seized in Vermont.

In her lawsuit, she alleges that Apple broke its promise to protect victims like her when it abandoned the planned CSAM scanning of iCloud. By doing so, she says, Apple allowed the material to spread widely.

As a result, the suit claims, Apple is selling "defective products that have harmed a class of customers" like her.

More Victims Join Lawsuit

The woman's lawsuit seeks changes to Apple's practices and potential compensation for a class of 2,680 other eligible victims, according to one of her lawyers. The suit notes that the CSAM detection tools used by Google and Meta catch far more illegal material than Apple's nudity detection feature does.

Under federal law, child sexual abuse victims are entitled to at least $150,000 in damages. With the tripling of damages the suit seeks, Apple's total liability could exceed $1.2 billion if the company is found liable and all potential plaintiffs are compensated.
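As a rough check on that figure, assume each of the 2,680 class members is awarded only the $150,000 minimum and that the total is tripled. The tripling is an assumption here; the statutory minimum alone comes to about $402 million:

$$
\begin{aligned}
2{,}680 \times \$150{,}000 &= \$402{,}000{,}000 \\
3 \times \$402{,}000{,}000 &= \$1{,}206{,}000{,}000 > \$1.2\ \text{billion}
\end{aligned}
$$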

In a related case, lawyers acting on behalf of a nine-year-old CSAM victim sued Apple in August. In that case, the girl alleged that strangers sent her CSAM videos via iCloud links and “encouraged her to film and upload” similar videos, according to The New York Times, which reported on both cases.

Apple has moved to dismiss the North Carolina case, arguing that Section 230 of the Communications Decency Act shields it from liability for material its users upload to iCloud. It also argued that it is immune from product liability claims because iCloud is not a standalone product.

Court decisions soften Section 230 protections

However, recent court rulings may undercut Apple's efforts to avoid liability. The U.S. Court of Appeals for the Ninth Circuit has ruled that such protections apply only to active content moderation and do not act as a blanket shield against liability.

Apple spokesman Fred Sainz said in response to the new lawsuit that Apple finds “child sexual abuse material abhorrent, and we are committed to combating the ways predators put children at risk.”

Sainz added that “we are urgently and aggressively innovating to combat these crimes without compromising the safety and privacy of all our users.”

He pointed to the expansion of nudity detection features in the Messages app, as well as the ability for users to report harmful material to Apple.

The woman behind the lawsuit and her lawyer, Margaret Mabey, dispute that Apple has done enough. In preparing the case, Mabey pored over law enforcement reports and other documents to find cases involving images of her clients and Apple products.

Mabey eventually compiled a list of more than 80 instances of the images being shared. One of the people who shared them was a Bay Area man who was caught with more than 2,000 illegal images and videos stored in iCloud, the Times noted.

Follow AppleInsider on Google News